In our previous article, we covered how to improve your productivity with Generative AI on the Now Platform. In this article, we take a deep dive into the groundbreaking capabilities of the ServiceNow Generative AI Controller.

Introducing Generative AI on the Now platform

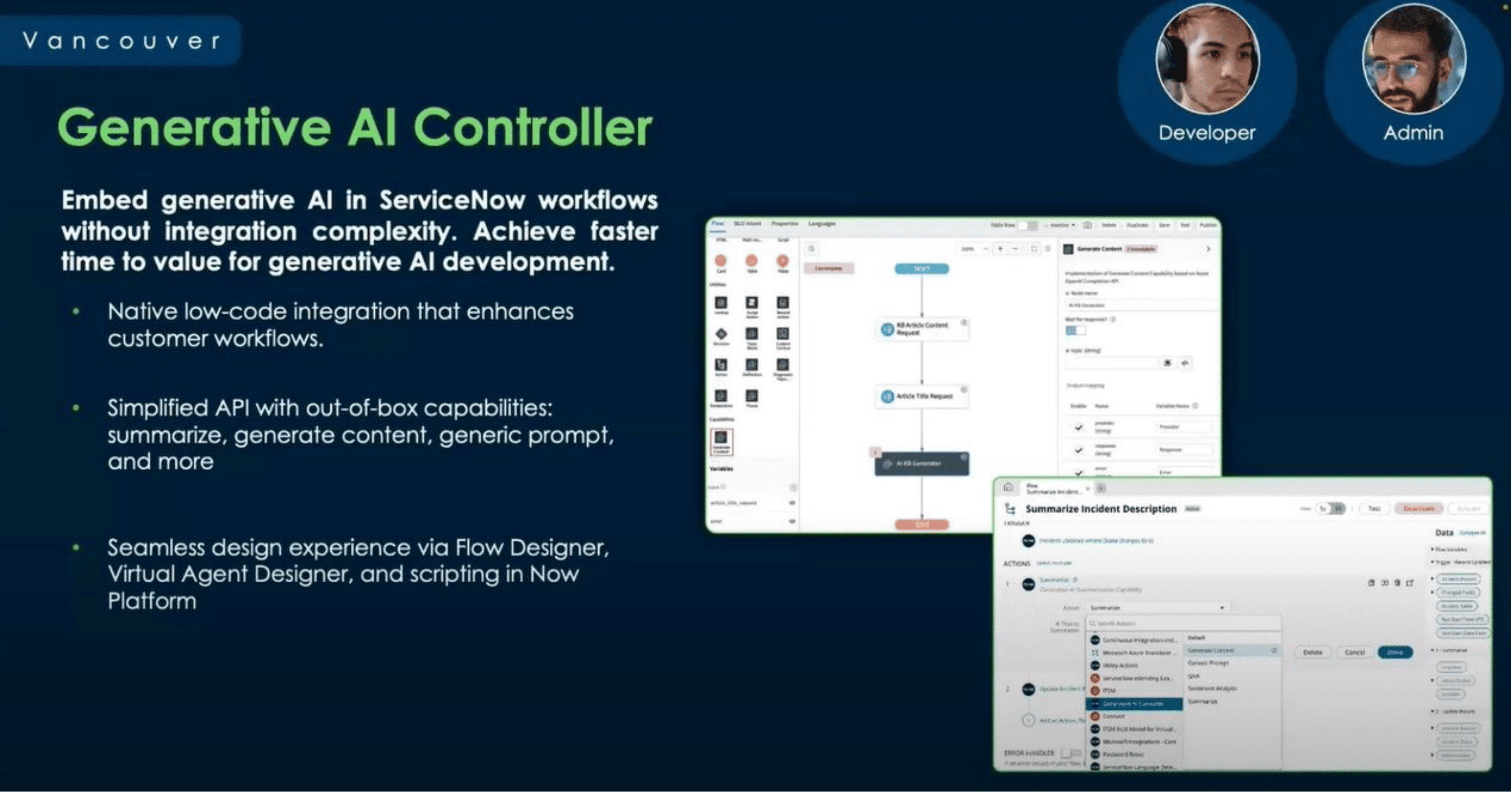

The Generative AI Controller streamlines integration with available Generative AI providers. ServiceNow has revealed upcoming use cases for Generative AI, including case summarization and text-to-code. Notably, the Generative AI Controller is set to launch with the ServiceNow Platform Vancouver release. This exciting development will enable seamless connectivity to OpenAI, Azure OpenAI, or ServiceNow large language models, allowing the direct embedding of Generative AI into ServiceNow workflows.

Accelerate Time-to-value with Generative AI Controller

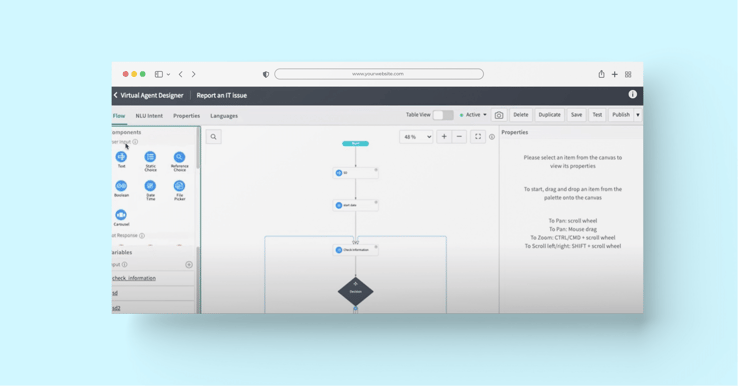

1. Enhance the design experience in Flow Designer, Virtual Agent, and scripting.

2. Seamlessly integrate into the Virtual Agent Designer user interface.

3. Leverage out-of-the-box use cases such as summarization, content generation, Q&A, and generic prompts.

4. Access functionalities across various builder interfaces, such as Virtual Agent Designer, Mobile App Builder, Flow Designer, and scripting.

Generative AI Controller: Create a Powerful Experience

The Generative AI Controller uses Integration Hub for its connections. It contains embedded Integration Hub spokes that connect to third-party Large Language Model (LLM) service providers. LLM transactions from a ServiceNow production instance count as Integration Hub transactions; those from sub-production instances do not. When OpenAI is the provider, ServiceNow automatically applies OpenAI's moderation API.

For Azure OpenAI, we rely on Azure's own moderation. Keep in mind that the data and queries you submit from the app are sent to OpenAI or Azure OpenAI. Review each provider's data privacy policy and decide which usage policy best fits your organization.

Although we have taken steps to reduce the likelihood of hallucinations through selective use cases and prompt engineering, there still exists a potential for inaccuracies in the generated content. Therefore, we strongly recommend incorporating human-in-the-loop review for such content to mitigate these issues effectively.
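As a rough illustration of what a human-in-the-loop gate can look like, here is a minimal Python sketch. It is not ServiceNow code and does not use any ServiceNow API; the names `GeneratedDraft`, `review`, and `publishable_text` are hypothetical and exist only for this example. The idea is simply that generated text stays in a pending state until a named reviewer approves it.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ReviewStatus(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class GeneratedDraft:
    """Hypothetical container for a piece of AI-generated content."""
    text: str
    status: ReviewStatus = ReviewStatus.PENDING
    reviewer: Optional[str] = None


def review(draft: GeneratedDraft, reviewer: str, approve: bool) -> GeneratedDraft:
    """Record a human decision on the draft."""
    draft.reviewer = reviewer
    draft.status = ReviewStatus.APPROVED if approve else ReviewStatus.REJECTED
    return draft


def publishable_text(draft: GeneratedDraft) -> str:
    """Return the text only if a human has approved it."""
    if draft.status is not ReviewStatus.APPROVED:
        raise PermissionError("Draft has not been approved by a human reviewer")
    return draft.text


# Usage: a generated case summary cannot be published until it is reviewed.
draft = GeneratedDraft(text="Customer reported a login failure after a password reset.")
review(draft, reviewer="agent.jane", approve=True)
print(publishable_text(draft))
```

In a real deployment the approval step would live inside the workflow itself; the sketch only shows the rule that nothing generated is released without a recorded human decision.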

INRY supports the GPT-3, GPT-3.5 Turbo, and GPT-4 models. Remember that the cost depends on the provider and the output length. For example, OpenAI charges $0.002 per 1K tokens for GPT-3.5 Turbo.
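To get a feel for what that rate means in practice, the arithmetic can be sketched in a few lines of Python. The price constant is the GPT-3.5 Turbo figure quoted above; the function name `estimate_cost` and the sample workload are assumptions made for illustration, and actual billing rules vary by provider and model.

```python
from decimal import Decimal

# Price quoted in this article for GPT-3.5 Turbo: $0.002 per 1K tokens.
# Provider pricing changes over time, so treat this as an illustrative constant.
GPT_35_TURBO_PRICE_PER_1K = Decimal("0.002")


def estimate_cost(total_tokens: int,
                  price_per_1k: Decimal = GPT_35_TURBO_PRICE_PER_1K) -> Decimal:
    """Estimate the charge in USD for a given number of billed tokens."""
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return price_per_1k * total_tokens / 1000


# Hypothetical workload: 500 case summaries at roughly 1,200 tokens each.
monthly_tokens = 500 * 1200
print(f"Estimated cost: ${estimate_cost(monthly_tokens)}")
```

`Decimal` is used instead of floats so that small per-token prices do not pick up rounding noise when multiplied across large volumes.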

INRY can help you harness the power of AI where it matters most. Now it's your turn to seize the opportunity. Let us guide you towards a future filled with AI-driven possibilities. Get started with INRY today!